AI-driven video generation has advanced rapidly in recent years, and motion modeling has emerged as one of the most critical and challenging parts of creating temporally coherent, physically plausible video sequences. This review synthesizes findings from recent publications in computer vision, machine learning, and computational physics to examine how modern generative models learn and represent motion dynamics.

Understanding motion representation in neural architectures requires examining multiple interconnected domains: optical flow estimation, temporal consistency mechanisms, physics-informed constraints, and novel training paradigms. Each of these areas contributes essential components to the broader challenge of generating realistic video content that maintains both spatial fidelity and temporal coherence across extended sequences.

Optical Flow Integration in Neural Video Architectures

Optical flow—the pattern of apparent motion of objects in visual scenes—serves as a fundamental building block for motion understanding in video generation systems. Recent research has demonstrated that explicit optical flow integration significantly improves temporal consistency compared to purely implicit motion representations learned through standard convolutional or attention mechanisms.

Contemporary approaches to optical flow integration can be categorized into three primary methodologies: pre-computed flow guidance, end-to-end differentiable flow estimation, and hybrid architectures that combine both strategies. Pre-computed flow methods leverage established optical flow algorithms such as RAFT (Recurrent All-Pairs Field Transforms) or PWC-Net to generate motion fields that guide the generation process. These approaches benefit from the robustness of specialized flow estimation networks but introduce computational overhead and potential error propagation.

Differentiable Flow Estimation Modules

End-to-end differentiable flow estimation represents a more integrated approach, where flow computation modules are embedded directly within the generative architecture. This strategy enables joint optimization of motion estimation and video synthesis objectives, allowing the model to learn task-specific flow representations that may deviate from traditional optical flow definitions when beneficial for generation quality.

Recent work has explored lightweight flow estimation modules specifically designed for integration with diffusion models and autoregressive video generators. These modules typically employ pyramid-based architectures with coarse-to-fine refinement, balancing computational efficiency with estimation accuracy. The key innovation lies in designing flow modules that can backpropagate gradients effectively while maintaining real-time or near-real-time inference speeds.
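
The coarse-to-fine idea can be illustrated with a deliberately small sketch. It is not a flow network: it estimates a single global integer translation between two frames rather than a dense flow field, and the function names (`downsample`, `best_shift`, `coarse_to_fine_shift`) are hypothetical. It assumes NumPy, periodic image borders, and image sizes divisible by 2 at every pyramid level.

```python
import numpy as np

def downsample(img):
    """Halve resolution by 2x2 average pooling (assumes even height and width)."""
    h, w = img.shape
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def best_shift(prev, nxt, center, radius=1):
    """Search integer (dy, dx) shifts near `center` that best map prev onto nxt."""
    best, best_err = center, np.inf
    for dy in range(center[0] - radius, center[0] + radius + 1):
        for dx in range(center[1] - radius, center[1] + radius + 1):
            shifted = np.roll(prev, (dy, dx), axis=(0, 1))  # periodic shift
            err = np.mean((shifted - nxt) ** 2)
            if err < best_err:
                best, best_err = (dy, dx), err
    return best

def coarse_to_fine_shift(prev, nxt, levels=3):
    """Estimate a global shift at the coarsest level, then refine level by level."""
    pyramid = [(prev, nxt)]
    for _ in range(levels - 1):
        p, n = pyramid[-1]
        pyramid.append((downsample(p), downsample(n)))
    shift = (0, 0)
    for level, (p, n) in enumerate(reversed(pyramid)):
        if level > 0:
            # A shift of s pixels at one level is 2s pixels at the next finer level.
            shift = (2 * shift[0], 2 * shift[1])
        shift = best_shift(p, n, shift)
    return shift
```

Each level only searches a 3x3 neighborhood, yet the pyramid lets the estimate reach displacements of several pixels at full resolution. Learned pyramid modules follow the same pattern with dense, sub-pixel updates.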

Key Insight: Hybrid architectures that combine pre-computed flow for initialization with differentiable refinement modules have shown superior performance in maintaining long-term temporal consistency while adapting to domain-specific motion patterns. This approach leverages the strengths of both methodologies while mitigating their individual limitations.

Physics-Informed Neural Architectures for Motion Modeling

The integration of physical principles into neural video generation represents a paradigm shift from purely data-driven approaches toward models that incorporate domain knowledge about real-world motion dynamics. Physics-informed neural networks (PINNs) for video generation embed conservation laws, kinematic constraints, and dynamical equations directly into the learning process, resulting in more physically plausible motion patterns.

Conservation Law Constraints

Fundamental conservation laws (conservation of mass, momentum, and energy) provide powerful constraints for motion modeling. Recent architectures incorporate these principles through specialized loss functions and architectural inductive biases. For instance, divergence-free flow fields can be enforced through projection layers that split predicted motion into a divergence-free (solenoidal) component and a curl-free (irrotational) component, as in a Helmholtz decomposition, and then discard or penalize the curl-free part, which carries all of the divergence.
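
A projection of this kind can be sketched with a Fourier-space Helmholtz projection. This is a minimal NumPy illustration, assuming a 2D velocity field on a periodic grid; in a trained model the same operation would be written in a differentiable framework. The function names are hypothetical, `u` is taken to be the x-component (along axis 1) and `v` the y-component (along axis 0).

```python
import numpy as np

def wavenumbers(h, w):
    """Frequency grids for an (h, w) periodic domain, with Nyquist modes zeroed."""
    ky = np.fft.fftfreq(h)[:, None].copy()
    kx = np.fft.fftfreq(w)[None, :].copy()
    if h % 2 == 0:
        ky[h // 2, 0] = 0.0  # Nyquist mode has no well-defined odd derivative
    if w % 2 == 0:
        kx[0, w // 2] = 0.0
    return kx, ky

def spectral_divergence(u, v):
    """Divergence du/dx + dv/dy computed with spectral derivatives."""
    kx, ky = wavenumbers(*u.shape)
    div_hat = 2j * np.pi * (kx * np.fft.fft2(u) + ky * np.fft.fft2(v))
    return np.fft.ifft2(div_hat).real

def project_divergence_free(u, v):
    """Remove the curl-free part of (u, v), leaving a divergence-free field."""
    kx, ky = wavenumbers(*u.shape)
    k2 = kx**2 + ky**2
    k2[k2 == 0] = 1.0  # these modes carry no divergence, so leave them unchanged
    u_hat, v_hat = np.fft.fft2(u), np.fft.fft2(v)
    k_dot_vel = kx * u_hat + ky * v_hat        # proportional to the divergence
    u_hat = u_hat - kx * k_dot_vel / k2        # subtract the component along k
    v_hat = v_hat - ky * k_dot_vel / k2
    return np.fft.ifft2(u_hat).real, np.fft.ifft2(v_hat).real
```

Applying `project_divergence_free` to a random field drives `spectral_divergence` of the result to numerical zero. A softer alternative is to add the mean squared divergence to the training loss instead of projecting.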

Mass conservation constraints are particularly relevant for fluid dynamics and deformable object motion. Neural architectures can enforce approximate mass conservation by ensuring that the spatial integral of density fields remains constant across time steps, or by constraining flow fields to satisfy continuity equations. These constraints significantly improve the realism of generated videos featuring fluid motion, smoke, or other continuous media.
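
Both forms of the constraint can be written as simple penalties. The sketch below is an assumption-laden illustration rather than a published loss: it uses NumPy, a periodic 2D grid with unit spacing, central differences, and hypothetical function names.

```python
import numpy as np

def mass_drift_penalty(density_seq):
    """Mean squared deviation of total mass from the first frame.

    density_seq: array of shape (T, H, W).
    """
    mass = density_seq.sum(axis=(1, 2))
    return float(np.mean((mass - mass[0]) ** 2))

def continuity_residual(rho_t, rho_next, u, v, dt=1.0):
    """Residual of d(rho)/dt + div(rho * velocity) = 0 on a periodic grid.

    u is the x-velocity (axis 1), v the y-velocity (axis 0).
    """
    flux_x, flux_y = rho_t * u, rho_t * v
    div = (np.roll(flux_x, -1, axis=1) - np.roll(flux_x, 1, axis=1)) / 2.0
    div = div + (np.roll(flux_y, -1, axis=0) - np.roll(flux_y, 1, axis=0)) / 2.0
    return (rho_next - rho_t) / dt + div
```

A training loss could add the mean of `continuity_residual(...) ** 2` to the reconstruction term. Because the flux differences cancel when summed over a periodic grid, a density update with zero residual also conserves total mass.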

Lagrangian and Eulerian Perspectives

Motion representation in video generation can adopt either Lagrangian (particle-based) or Eulerian (grid-based) perspectives, each offering distinct advantages. Lagrangian approaches track individual particles or features through time, naturally preserving object identity and enabling long-term correspondence. Eulerian methods represent motion as velocity fields defined on fixed spatial grids, facilitating efficient computation and integration with convolutional architectures.
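
The two views meet whenever particles are moved through a grid-based velocity field. The following minimal sketch assumes NumPy, a nearest-cell velocity lookup, and a forward Euler step; `advect_particles` is a hypothetical name.

```python
import numpy as np

def advect_particles(positions, velocity_grid, dt=1.0):
    """Move Lagrangian particles through an Eulerian velocity field.

    positions: (N, 2) array of (x, y) coordinates in pixel units.
    velocity_grid: (H, W, 2) array holding (vx, vy) at each grid cell.
    """
    h, w, _ = velocity_grid.shape
    ix = np.clip(np.rint(positions[:, 0]).astype(int), 0, w - 1)
    iy = np.clip(np.rint(positions[:, 1]).astype(int), 0, h - 1)
    # Sample the grid velocity at each particle, then take one Euler step.
    return positions + dt * velocity_grid[iy, ix]
```

The particle array keeps one row per tracked point, so identity is preserved across steps, while the velocity grid stays fixed in space.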

Hybrid Lagrangian-Eulerian architectures have emerged as a promising direction, combining particle tracking for salient objects with grid-based representations for background motion and atmospheric effects. These architectures typically employ attention mechanisms to dynamically allocate computational resources between particle and grid representations based on scene content.

Novel Training Objectives for Temporal Coherence

Training objectives play a crucial role in shaping the motion characteristics learned by video generation models. Beyond standard reconstruction losses, recent research has introduced specialized objectives targeting temporal consistency, motion smoothness, and long-range coherence. These objectives address fundamental challenges in video generation, including temporal flickering, motion discontinuities, and drift in extended sequences.

Perceptual Temporal Loss Functions

Perceptual loss functions, which compare high-level feature representations rather than raw pixel values, have proven highly effective for image generation. Extending these concepts to video requires temporal perceptual losses that capture motion patterns and temporal consistency. Recent approaches employ pre-trained video understanding networks (such as I3D or SlowFast) to extract spatio-temporal features, computing perceptual distances in this learned feature space.
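
A loss of this kind is often written in a form like the one below. This is a sketch under stated assumptions, not a formula taken from a specific paper: the layer index l, the per-layer weights λ_l, and the squared L2 norm are illustrative choices.

```latex
\mathcal{L}_{\text{temporal-perceptual}}
  = \sum_{l} \lambda_l \,
    \bigl\lVert \phi_l(x) - \phi_l(\hat{y}) \bigr\rVert_2^2
```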

In a loss of this kind, φ denotes a pre-trained spatio-temporal feature extractor, x the ground-truth frames, and ŷ the generated frames. Comparing clips in this feature space encourages the model to match not just individual frame appearance but also the temporal transitions and motion patterns present in real video data.

Adversarial Training for Motion Realism

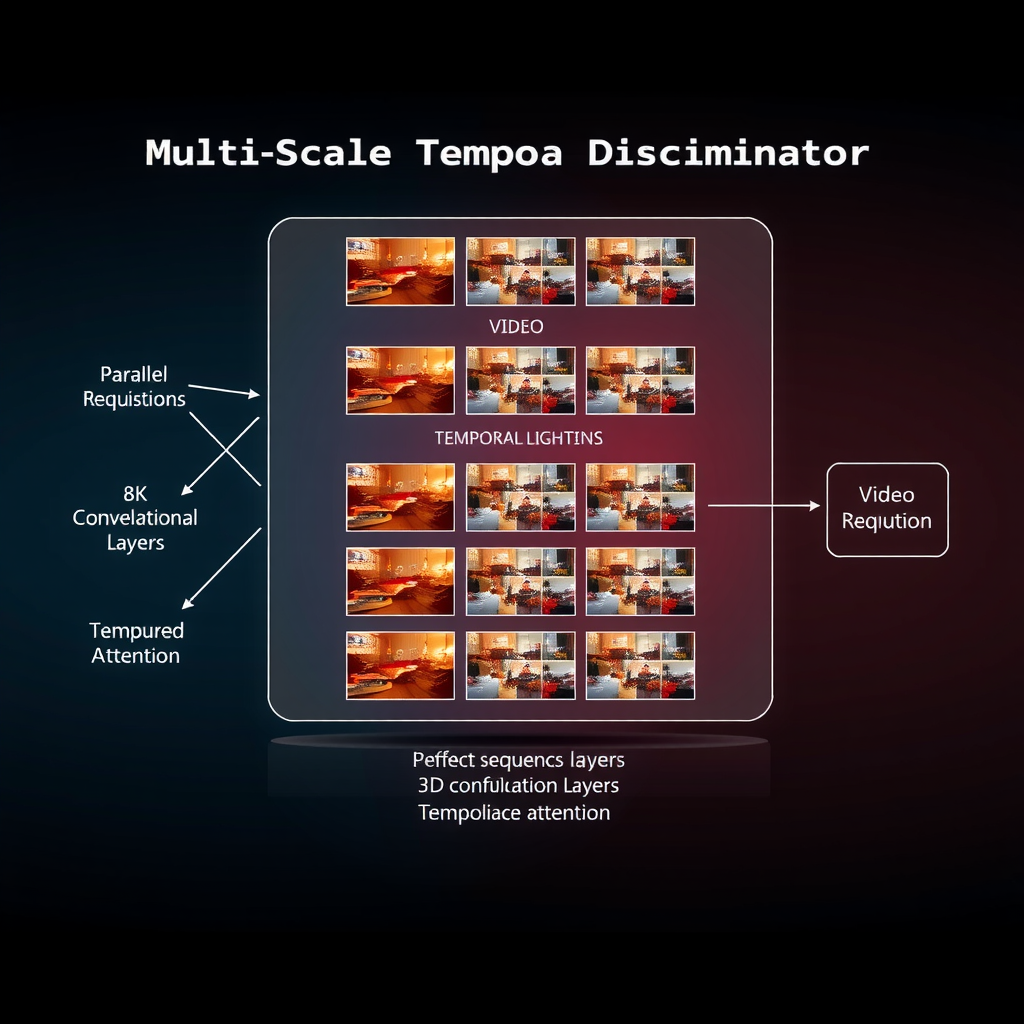

Generative adversarial networks (GANs) have been adapted for video generation through temporal discriminators that assess motion realism. Unlike spatial discriminators that evaluate individual frames, temporal discriminators analyze frame sequences to detect unrealistic motion patterns, temporal inconsistencies, and artifacts specific to generated video.

Multi-scale temporal discriminators represent a particularly effective architecture, evaluating motion patterns at multiple temporal resolutions simultaneously. This approach enables the model to learn both fine-grained motion details (frame-to-frame transitions) and coarse temporal structure (long-term motion trajectories). The discriminator architecture typically employs 3D convolutions or temporal attention mechanisms to capture motion information across multiple frames.
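
The multi-scale input can be sketched as temporal subsampling of the same clip at several strides. In the illustration below, `toy_motion_energy` is only a hand-written stand-in for a learned discriminator branch, and both function names and the stride values are assumptions.

```python
import numpy as np

def temporal_pyramid(video, strides=(1, 2, 4)):
    """Return views of a (T, H, W) clip subsampled at several temporal strides."""
    return [video[::s] for s in strides]

def toy_motion_energy(clip):
    """Stand-in for a discriminator branch: mean absolute frame difference."""
    return float(np.abs(np.diff(clip, axis=0)).mean())
```

A real system would feed each subsampled clip to its own 3D-convolutional or attention-based branch and combine the branch outputs in the adversarial loss.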

Attention Mechanisms for Long-Range Temporal Dependencies

Capturing long-range temporal dependencies remains one of the most challenging aspects of video generation. While convolutional architectures excel at modeling local spatial and temporal patterns, they struggle with dependencies spanning many frames. Attention mechanisms, particularly transformer-based architectures, have emerged as powerful tools for modeling these long-range relationships.

Spatio-Temporal Attention Architectures

Spatio-temporal attention mechanisms extend standard self-attention to jointly model spatial and temporal relationships. Factorized attention designs, which separate spatial and temporal attention operations, offer computational advantages while maintaining modeling capacity. These architectures typically alternate between spatial attention layers (attending across pixels within frames) and temporal attention layers (attending across frames at fixed spatial locations).
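
The alternation between spatial and temporal attention can be sketched as follows. This is a simplified NumPy illustration: it uses a single head and identity query, key, and value projections, which a real layer would replace with learned weights. The function names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens):
    """Single-head self-attention over the second-to-last axis of (..., N, D)."""
    d = tokens.shape[-1]
    scores = tokens @ np.swapaxes(tokens, -1, -2) / np.sqrt(d)
    return softmax(scores) @ tokens

def factorized_attention(video):
    """Spatial attention within each frame, then temporal attention per location.

    video: (T, H, W, D) array of per-pixel feature vectors.
    """
    t, h, w, d = video.shape
    # Spatial step: each frame is a batch item with H*W tokens.
    x = self_attention(video.reshape(t, h * w, d)).reshape(t, h, w, d)
    # Temporal step: each spatial location is a batch item with T tokens.
    x = np.transpose(x, (1, 2, 0, 3)).reshape(h * w, t, d)
    x = self_attention(x).reshape(h, w, t, d)
    return np.transpose(x, (2, 0, 1, 3))
```

The output has the same shape as the input, so the block can be stacked or interleaved with feed-forward layers.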

Recent innovations include axial attention patterns that further decompose spatial attention into row and column operations, and window-based attention schemes that limit attention computation to local spatio-temporal neighborhoods. These optimizations enable scaling to higher resolutions and longer sequences while maintaining tractable computational requirements.
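
The savings are easiest to see by counting query-key pairs. The helper below is a back-of-the-envelope sketch with a hypothetical name. It assumes axial attention runs separately along rows, columns, and time, and that windowed attention uses non-overlapping windows of the given size.

```python
def attention_pair_counts(t, h, w, window=(4, 8, 8)):
    """Count query-key pairs for several attention patterns on a T x H x W clip."""
    n = t * h * w                      # total number of tokens
    wt, wh, ww = window
    return {
        "full": n * n,                                   # every token sees every token
        "factorized": t * (h * w) ** 2 + (h * w) * t ** 2,  # spatial, then temporal
        "axial": n * (h + w + t),                        # one axis at a time
        "windowed": n * (wt * wh * ww),                  # tokens in the same window
    }
```

For a 16-frame clip at 32 x 32 tokens, this gives 268,435,456 pairs for full attention, 17,039,360 for factorized, 4,194,304 for 4 x 8 x 8 windows, and 1,310,720 for axial attention.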

Memory-Augmented Architectures

Memory-augmented neural networks provide an alternative approach to modeling long-range dependencies by maintaining explicit memory banks of past observations. These architectures store compressed representations of previous frames in external memory modules, which can be queried during generation to retrieve relevant historical context. This approach proves particularly effective for maintaining consistency of object appearances and motion patterns across extended sequences.

Differentiable memory addressing mechanisms, such as content-based attention over memory slots, enable end-to-end training of memory-augmented video generators. The model learns both what information to store in memory and how to retrieve and utilize this information during generation, adapting the memory strategy to task-specific requirements.
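
Content-based addressing can be sketched as a softmax over similarities between a query and the stored keys. The example assumes NumPy, cosine similarity, and a scalar `sharpness` factor; these choices and the name `read_memory` are illustrative, not a specific published design.

```python
import numpy as np

def read_memory(query, keys, values, sharpness=1.0):
    """Soft read from memory using cosine similarity between query and keys.

    query: (D,), keys: (M, D), values: (M, V). Returns (read_vector, weights).
    """
    q = query / (np.linalg.norm(query) + 1e-8)
    k = keys / (np.linalg.norm(keys, axis=1, keepdims=True) + 1e-8)
    logits = sharpness * (k @ q)
    weights = np.exp(logits - logits.max())   # stable softmax over memory slots
    weights = weights / weights.sum()
    return weights @ values, weights
```

Every step is differentiable with respect to the query, keys, and values, which is what allows the write and read behavior to be learned end to end.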

Diffusion Models for Video Generation

Diffusion models have recently achieved state-of-the-art results in image generation and are increasingly being adapted for video synthesis. These models learn to transform random noise into coherent video sequences through iterative denoising. The diffusion process naturally incorporates temporal consistency through the denoising trajectory, but careful architectural design is required to fully leverage this property.

Temporal Conditioning in Diffusion Models

Effective temporal conditioning mechanisms are essential for video diffusion models. Recent approaches incorporate temporal information through multiple channels: explicit frame indices or timestamps, learned temporal embeddings, and conditioning on previous frames or optical flow. These conditioning signals guide the denoising process to generate temporally coherent sequences rather than independent frames.
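
One simple way to expose frame indices to a network is a sinusoidal embedding of the kind used for positions in transformers. The sketch below assumes NumPy and an even embedding dimension. The name `frame_embedding` and the default `max_period` are illustrative assumptions.

```python
import numpy as np

def frame_embedding(frame_indices, dim=8, max_period=10000.0):
    """Sinusoidal embedding of frame indices; returns an array of shape (T, dim)."""
    idx = np.asarray(frame_indices, dtype=float)[:, None]
    half = dim // 2
    # Geometrically spaced frequencies from 1 down to roughly 1 / max_period.
    freqs = np.exp(-np.log(max_period) * np.arange(half) / half)
    angles = idx * freqs[None, :]
    return np.concatenate([np.sin(angles), np.cos(angles)], axis=1)
```

The resulting vectors would typically be added to, or concatenated with, per-frame features before denoising. Learned embeddings are a drop-in alternative.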

Cascaded diffusion architectures, which generate video at progressively increasing resolutions and frame rates, have shown particular promise. These models first generate low-resolution keyframes, then use super-resolution and frame interpolation diffusion models to refine spatial and temporal resolution. This coarse-to-fine approach naturally enforces temporal consistency by conditioning high-resolution generation on consistent low-resolution structure.

Score-Based Motion Modeling

Score-based generative models, which learn the gradient of the log-density of the data distribution (the score function), provide a principled framework for motion modeling. By learning score functions over both spatial and temporal dimensions, these models can generate video by following the gradient flow of the learned distribution. This approach naturally incorporates motion dynamics through the temporal component of the score function.

Recent theoretical work has established connections between score-based models and optimal transport theory, providing insights into how these models learn motion representations. The score function can be interpreted as defining a velocity field that transports noise distributions to data distributions, with the temporal component encoding motion dynamics in the generated video.
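
How a score function moves noise toward data can be seen in a toy one-dimensional case. The sketch below is not a video model: it assumes a Gaussian target whose score is known in closed form, where a real system would use a learned network, and it samples with unadjusted Langevin dynamics. Function names and default values are hypothetical.

```python
import numpy as np

def gaussian_score(x, mean=3.0, std=1.0):
    """Score (gradient of the log-density) of a 1D Gaussian."""
    return -(x - mean) / std**2

def langevin_sample(score_fn, n_samples=5000, n_steps=500, step=0.05, seed=0):
    """Draw samples by following the score with injected noise."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(n_samples)  # start from pure noise
    for _ in range(n_steps):
        noise = rng.standard_normal(n_samples)
        # Drift along the score, plus noise scaled to match the step size.
        x = x + 0.5 * step * score_fn(x) + np.sqrt(step) * noise
    return x
```

Starting from standard normal noise, the samples drift toward the target mean of 3. The drift term `0.5 * step * score_fn(x)` plays the role of the velocity field described above.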

Evaluation Metrics for Motion Quality

Assessing motion quality in generated video requires metrics that capture temporal consistency, motion realism, and physical plausibility. Traditional metrics like Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM) focus primarily on spatial quality and fail to adequately measure temporal artifacts. Recent research has developed specialized metrics for evaluating motion characteristics.

Temporal Consistency Metrics

Temporal consistency metrics quantify frame-to-frame coherence and the absence of flickering or sudden discontinuities. Optical flow-based metrics compute the consistency of estimated flow fields across generated sequences, penalizing abrupt changes in motion patterns. Warping error metrics assess how well frames can be predicted from previous frames using estimated optical flow, providing a direct measure of temporal predictability.
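
A warping error of this kind can be sketched in a few lines. The example assumes NumPy, grayscale frames, nearest-neighbor sampling, and flow fields that are already available (for instance from an external estimator). Practical implementations usually use bilinear sampling and mask occluded pixels. The function names are hypothetical.

```python
import numpy as np

def warp_with_flow(prev_frame, flow):
    """Backward warp: output[y, x] = prev_frame[y + flow_y, x + flow_x].

    prev_frame: (H, W); flow: (H, W, 2) holding (flow_x, flow_y) per pixel.
    """
    h, w = prev_frame.shape
    ys, xs = np.mgrid[0:h, 0:w]
    src_x = np.clip(np.rint(xs + flow[..., 0]).astype(int), 0, w - 1)
    src_y = np.clip(np.rint(ys + flow[..., 1]).astype(int), 0, h - 1)
    return prev_frame[src_y, src_x]

def warping_error(frames, flows):
    """Mean squared error between each frame and its flow-warped predecessor.

    frames: (T, H, W); flows: (T - 1, H, W, 2), where flows[t] maps pixels of
    frame t + 1 back to their source locations in frame t.
    """
    errors = [
        np.mean((frames[t + 1] - warp_with_flow(frames[t], flows[t])) ** 2)
        for t in range(len(frames) - 1)
    ]
    return float(np.mean(errors))
```

A low value means each frame is well explained by moving the previous frame along the flow. Flicker and abrupt appearance changes raise it.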

Perceptual temporal metrics leverage pre-trained video understanding networks to compute feature-space distances between consecutive frames. These metrics better align with human perception of temporal artifacts compared to pixel-space metrics, as they capture high-level motion patterns and semantic consistency rather than low-level pixel fluctuations.

Motion Realism Assessment

Assessing motion realism requires comparing statistical properties of generated motion against real-world motion distributions. Frequency domain analysis of motion patterns can reveal unnatural periodicities or spectral characteristics that distinguish generated from real video. Motion trajectory analysis examines the smoothness and physical plausibility of object paths through time, detecting violations of kinematic constraints.
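
Two of these checks are easy to prototype. The sketch below assumes NumPy, a tracked 2D trajectory, and a scalar motion signal sampled at a known frame rate; both function names are hypothetical.

```python
import numpy as np

def mean_squared_acceleration(trajectory, dt=1.0):
    """Smoothness score from second finite differences of a (T, 2) trajectory."""
    accel = np.diff(trajectory, n=2, axis=0) / dt**2
    return float(np.mean(np.sum(accel**2, axis=1)))

def dominant_frequency(signal, fps):
    """Frequency in Hz of the strongest non-constant component of a 1D signal."""
    spectrum = np.abs(np.fft.rfft(signal - np.mean(signal)))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fps)
    return float(freqs[np.argmax(spectrum)])
```

Constant-velocity motion scores zero on the first measure, while jitter increases it. The second can flag an unexpected periodicity, for example a pulsing artifact at a fixed rate, by comparing the spectra of generated and real clips.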

User studies remain an important component of motion quality evaluation, as human perception is ultimately the target for video generation systems. Recent work has developed standardized protocols for perceptual evaluation of video generation, including paired comparison tasks and absolute quality rating scales specifically designed to assess temporal artifacts and motion realism.

Future Directions and Open Challenges

Despite significant progress, numerous challenges remain in motion modeling for video generation. Generating long, temporally consistent sequences (minutes rather than seconds) remains computationally prohibitive with current architectures. Scaling to high resolutions while maintaining temporal consistency requires innovations in both model architecture and training methodology.

Controllable motion generation—enabling users to specify desired motion patterns through intuitive interfaces—represents another important research direction. This includes trajectory-based control, where users sketch desired object paths, and physics-based control, where users specify forces or constraints that govern motion dynamics. Developing architectures that can flexibly incorporate diverse control signals while maintaining generation quality poses significant technical challenges.

Research Opportunity: The integration of learned motion priors with explicit physical simulation represents a promising frontier. Hybrid approaches that combine neural generation with differentiable physics engines could enable unprecedented control over motion characteristics while ensuring physical plausibility. This direction requires advances in differentiable simulation, neural-symbolic integration, and efficient optimization algorithms.

Generalization across domains and motion types remains a fundamental challenge. Models trained on specific video domains (e.g., human motion, natural scenes) often fail to generalize to novel motion patterns or object types. Developing architectures with stronger inductive biases toward physical principles and motion universals could improve cross-domain generalization. Meta-learning approaches that enable rapid adaptation to new motion domains with limited data represent another promising direction.

Conclusion

Motion modeling in AI video generation has evolved from simple frame interpolation to sophisticated architectures that integrate optical flow, physical principles, and learned temporal representations. Recent advances in diffusion models, attention mechanisms, and physics-informed architectures have significantly improved the temporal consistency and motion realism of generated video.

The field continues to progress rapidly, with ongoing research addressing fundamental challenges in long-term consistency, computational efficiency, and controllable generation. As these challenges are overcome, AI video generation systems will increasingly enable applications in content creation, scientific visualization, simulation, and beyond. The theoretical foundations established through current research—particularly the integration of physical principles with learned representations—will guide the development of next-generation video generation systems capable of producing extended, high-fidelity sequences with unprecedented control over motion dynamics.

For graduate students and researchers entering this field, understanding the interplay between optical flow estimation, physics-informed constraints, and novel training objectives provides an essential foundation for contributing to ongoing advances. The synthesis of computer vision, machine learning, and computational physics perspectives offers rich opportunities for interdisciplinary research that pushes the boundaries of what is possible in generative video systems.