Video diffusion models have substantially advanced generative AI, giving users far more control over synthetic video creation. At the heart of these systems lies the concept of latent representations: compressed encodings that capture the essential features of video content. This guide explores how researchers and practitioners can understand, manipulate, and leverage these latent spaces for controllable video generation.

The Foundation: What Are Latent Representations?

In video diffusion models, latent representations serve as compressed encodings of video data that preserve essential information while dramatically reducing computational requirements. Unlike raw pixel space, which contains redundant information and high dimensionality, latent space provides a more efficient and semantically meaningful representation of video content.

The transformation from pixel space to latent space occurs through a variational autoencoder (VAE) or similar encoder architecture. This encoder learns to compress video frames into a lower-dimensional representation while preserving the information necessary for high-quality reconstruction. The dimensionality reduction typically ranges from 4x to 8x per spatial dimension, resulting in significant computational savings during the diffusion process.

Key Properties of Latent Spaces

Understanding the fundamental properties of latent spaces is crucial for effective manipulation:

- Continuity: Similar videos map to nearby points in latent space, enabling smooth interpolation between different video concepts

- Disentanglement: Different dimensions or regions of latent space encode distinct semantic features such as motion, appearance, and temporal dynamics

- Hierarchical Structure: Latent representations often exhibit hierarchical organization, with coarse features encoded in certain dimensions and fine details in others

- Temporal Coherence: Video latent spaces maintain temporal relationships between frames, ensuring consistent motion and appearance across time

Technical Insight

In Stable Video Diffusion, each frame is encoded at a spatial resolution of H/8 × W/8 with 4 channels, where H and W are the original video height and width. That is a 64x reduction in spatial positions per frame, or roughly 48x fewer values overall once the 3 RGB channels are replaced by 4 latent channels. This keeps processing efficient while maintaining high-quality generation.
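
The shape arithmetic can be checked with a short sketch. The function names are hypothetical, the clip size is only illustrative, and the sketch assumes an 8x spatial factor, 4 latent channels, and no compression along the time axis.

```python
def latent_shape(height, width, frames, spatial_factor=8, latent_channels=4):
    """Return the (frames, channels, height, width) shape of the latent tensor."""
    if height % spatial_factor or width % spatial_factor:
        raise ValueError("height and width must be divisible by spatial_factor")
    return (frames, latent_channels, height // spatial_factor, width // spatial_factor)


def compression_ratios(height, width, frames, spatial_factor=8, latent_channels=4):
    """Compare the element counts of an RGB clip and its latent encoding."""
    t, c, h, w = latent_shape(height, width, frames, spatial_factor, latent_channels)
    pixel_values = frames * 3 * height * width  # 3 RGB channels per pixel
    latent_values = t * c * h * w
    return {
        "spatial": (height * width) / (h * w),   # positions per frame
        "total": pixel_values / latent_values,   # all stored values
    }


shape = latent_shape(576, 1024, 14)          # illustrative clip size (assumption)
ratios = compression_ratios(576, 1024, 14)
print(shape)   # (14, 4, 72, 128)
print(ratios)  # {'spatial': 64.0, 'total': 48.0}
```

The two numbers differ because the spatial grid shrinks by 8 × 8 = 64 while the channel count grows from 3 to 4.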

Dimensionality Reduction Approaches

While latent spaces are already compressed compared to pixel space, further dimensionality reduction can provide valuable insights into the structure and organization of learned representations. Several approaches have proven effective for analyzing and visualizing video diffusion latent spaces.

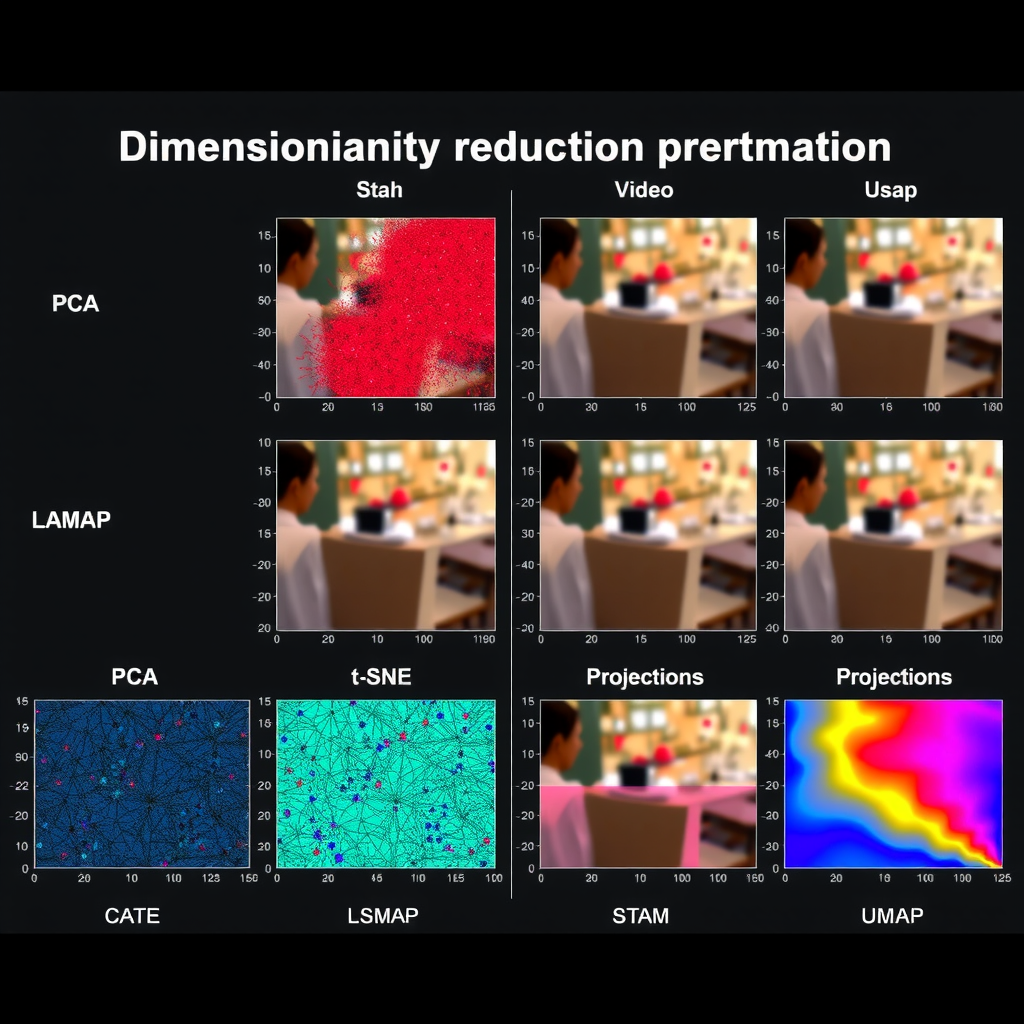

Principal Component Analysis (PCA)

PCA remains one of the most fundamental and interpretable dimensionality reduction techniques for latent space analysis. By identifying the directions of maximum variance in the latent space, PCA reveals the principal axes along which video representations vary most significantly.

In video diffusion models, the first few principal components often correspond to high-level semantic features such as overall scene composition, dominant motion patterns, and color schemes. Researchers have found that manipulating these principal components enables coarse-grained control over generated videos while maintaining temporal coherence.

Implementation Considerations

When applying PCA to video latent representations, several practical considerations emerge:

- Standardization of latent vectors before PCA ensures that all dimensions contribute equally to the analysis

- The number of components to retain depends on the desired level of detail and the explained variance ratio

- Temporal dimensions should be handled carefully to preserve motion information during reduction

- Batch processing of large video datasets requires efficient memory management and incremental PCA techniques
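
The considerations above can be sketched with plain NumPy. This is a minimal illustration rather than a reference implementation: `pca_on_latents` is a hypothetical helper, the input is random stand-in data, and each video's latent is simply flattened so that frame order is kept inside the feature vector.

```python
import numpy as np


def pca_on_latents(latents, n_components=2, eps=1e-8):
    """PCA over a batch of video latents using an SVD.

    latents: array of shape (num_videos, frames, channels, height, width).
    Returns (projected, components, explained_variance_ratio).
    """
    n = latents.shape[0]
    flat = latents.reshape(n, -1).astype(np.float64)

    # Standardize each feature so no single dimension dominates the variance.
    mean = flat.mean(axis=0)
    std = flat.std(axis=0)
    scaled = (flat - mean) / (std + eps)

    # Thin SVD: the rows of vt are the principal directions.
    _, singular_values, vt = np.linalg.svd(scaled, full_matrices=False)
    variance = singular_values ** 2
    ratio = variance / variance.sum()

    components = vt[:n_components]
    projected = scaled @ components.T
    return projected, components, ratio[:n_components]


rng = np.random.default_rng(0)
toy_latents = rng.normal(size=(32, 4, 4, 8, 8))  # random stand-in data
coords, axes, explained = pca_on_latents(toy_latents, n_components=3)
print(coords.shape, axes.shape, explained.shape)
```

The explained variance ratio returned here is what guides the choice of how many components to keep. For datasets that do not fit in memory, an incremental variant such as scikit-learn's `IncrementalPCA` is one option.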

Non-Linear Dimensionality Reduction

While PCA provides linear projections, non-linear techniques such as t-SNE and UMAP can reveal more complex structures in latent space. These methods are particularly valuable for visualizing how different video concepts cluster and relate to each other in the high-dimensional latent space.

t-SNE (t-Distributed Stochastic Neighbor Embedding) excels at preserving local structure, making it ideal for identifying clusters of similar videos. However, it can distort global relationships and is computationally intensive for large datasets.

UMAP (Uniform Manifold Approximation and Projection) offers a balance between local and global structure preservation while being more computationally efficient than t-SNE. Recent research has shown that UMAP projections of video latent spaces reveal meaningful semantic clusters corresponding to different motion types, scene categories, and visual styles.

[Figure: pipeline diagram. Pixel-space video (H × W × T × 3) → Compression → latent video (H/8 × W/8 × T × 4) → Analysis → Visualization]

Interpolation Methods Between Video Concepts

One of the most powerful applications of understanding latent representations is the ability to smoothly interpolate between different video concepts. This capability enables creative control over video generation and provides insights into how the model organizes semantic information.

Linear Interpolation (LERP)

The simplest interpolation method involves linear interpolation between two latent vectors. Given source latent z₁ and target latent z₂, intermediate latents are computed as:

z(t) = (1 − t)·z₁ + t·z₂, for t ∈ [0, 1]

While linear interpolation is computationally efficient and easy to implement, it can sometimes produce artifacts or semantically inconsistent intermediate videos, particularly when interpolating between very different concepts. This occurs because the straight-line path in latent space may pass through regions that don't correspond to realistic video content.
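
A minimal sketch of the LERP rule, z(t) = (1 − t)·z₁ + t·z₂, is shown below. The latents are random Gaussian stand-ins (an assumption, not real encoder outputs); the printed norms illustrate one reason the straight-line path can leave the region the model is used to, since the midpoint of two independent high-dimensional Gaussian samples has a noticeably smaller norm than either endpoint.

```python
import numpy as np


def lerp(z1, z2, t):
    """Linear interpolation between two latents of identical shape."""
    z1 = np.asarray(z1, dtype=np.float64)
    z2 = np.asarray(z2, dtype=np.float64)
    if z1.shape != z2.shape:
        raise ValueError("latents must have the same shape")
    return (1.0 - t) * z1 + t * z2


rng = np.random.default_rng(0)
z_a = rng.normal(size=(4, 4, 8, 8))  # stand-in latent (frames, channels, h, w)
z_b = rng.normal(size=(4, 4, 8, 8))

# Five evenly spaced points from z_a (t = 0) to z_b (t = 1).
path = [lerp(z_a, z_b, t) for t in np.linspace(0.0, 1.0, 5)]
norms = [float(np.linalg.norm(z)) for z in path]
print(norms)  # the middle entry is the smallest for this random pair
```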

Spherical Linear Interpolation (SLERP)

Spherical linear interpolation addresses some limitations of LERP by interpolating along the great circle connecting two points on a hypersphere. This approach is particularly effective when latent vectors are normalized or when the latent space exhibits spherical geometry:

z(t) = [sin((1 − t)·θ) / sin θ]·z₁ + [sin(t·θ) / sin θ]·z₂, where cos θ = (z₁ · z₂) / (‖z₁‖ ‖z₂‖) and t ∈ [0, 1]

SLERP often produces smoother and more semantically consistent interpolations, especially for normalized latent representations. When both endpoints have the same norm, SLERP keeps that norm along the whole path and moves at a constant angular rate, resulting in more uniform transitions between video concepts.
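
The following sketch implements SLERP for latents of any shape by treating each latent as one flattened vector. The `slerp` helper and its fallback to linear interpolation for degenerate inputs are illustrative choices, not taken from a specific library.

```python
import numpy as np


def slerp(z1, z2, t, eps=1e-7):
    """Spherical linear interpolation between two latents of identical shape."""
    z1 = np.asarray(z1, dtype=np.float64)
    z2 = np.asarray(z2, dtype=np.float64)
    a, b = z1.ravel(), z2.ravel()
    norm_product = np.linalg.norm(a) * np.linalg.norm(b)
    if norm_product < eps:
        # A zero-length endpoint has no direction: fall back to LERP.
        return (1.0 - t) * z1 + t * z2

    cos_theta = np.clip(np.dot(a, b) / norm_product, -1.0, 1.0)
    theta = np.arccos(cos_theta)
    sin_theta = np.sin(theta)
    if sin_theta < eps:
        # Nearly parallel or antiparallel endpoints: fall back to LERP.
        return (1.0 - t) * z1 + t * z2

    w1 = np.sin((1.0 - t) * theta) / sin_theta
    w2 = np.sin(t * theta) / sin_theta
    return w1 * z1 + w2 * z2


e1 = np.array([1.0, 0.0])
e2 = np.array([0.0, 1.0])
mid = slerp(e1, e2, 0.5)
print(mid, np.linalg.norm(mid))  # the midpoint stays on the unit circle
```

For the two unit vectors in the example, the midpoint lies at 45 degrees with unit length, whereas LERP would give a midpoint of length about 0.71.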

Geodesic Interpolation

For more sophisticated interpolation, geodesic paths through latent space can be computed using techniques from differential geometry. These paths follow the natural manifold structure of the latent space, potentially producing more realistic intermediate videos.

Recent research has explored using learned metrics to define geodesics that better respect the semantic structure of video latent spaces. These approaches train auxiliary networks to predict optimal interpolation paths based on perceptual similarity or other semantic criteria.

Temporal Consistency in Interpolation

When interpolating between video latents, maintaining temporal consistency across frames is crucial. Several strategies help ensure smooth motion and appearance transitions:

- Frame-wise interpolation: Interpolate corresponding frames independently while applying temporal smoothing

- Motion-aware interpolation: Account for optical flow and motion vectors during interpolation

- Hierarchical interpolation: Interpolate coarse features first, then refine with fine-grained details

- Attention-guided interpolation: Use attention mechanisms to identify and preserve important temporal relationships
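
As a sketch of the first strategy in the list above, the code below blends two latent clips frame by frame and then applies a small smoothing kernel along the frame axis. The function names, the (frames, channels, height, width) layout, and the 3-tap kernel are assumptions made for illustration.

```python
import numpy as np


def smooth_over_time(latents, kernel=(0.25, 0.5, 0.25)):
    """Smooth a (frames, channels, height, width) latent along the frame axis."""
    kernel = np.asarray(kernel, dtype=np.float64)
    if kernel.size % 2 == 0:
        raise ValueError("kernel length must be odd")
    kernel = kernel / kernel.sum()
    half = kernel.size // 2

    # Repeat the first and last frames so the clip length is unchanged.
    padded = np.pad(latents, ((half, half), (0, 0), (0, 0), (0, 0)), mode="edge")
    frames = latents.shape[0]
    out = np.zeros_like(latents, dtype=np.float64)
    for offset, weight in enumerate(kernel):
        out += weight * padded[offset:offset + frames]
    return out


def framewise_interpolate(video_a, video_b, t, kernel=(0.25, 0.5, 0.25)):
    """Blend corresponding frames of two latent clips, then smooth over time."""
    blended = (1.0 - t) * video_a + t * video_b
    return smooth_over_time(blended, kernel)


rng = np.random.default_rng(1)
clip_a = rng.normal(size=(16, 2, 4, 4))  # random stand-in latents
clip_b = rng.normal(size=(16, 2, 4, 4))
mixed = framewise_interpolate(clip_a, clip_b, t=0.5)
print(mixed.shape)
```

Wider kernels give steadier results at the cost of blurring fast motion, so the kernel width is a trade-off to tune per use case.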

Encoding Motion and Appearance Information

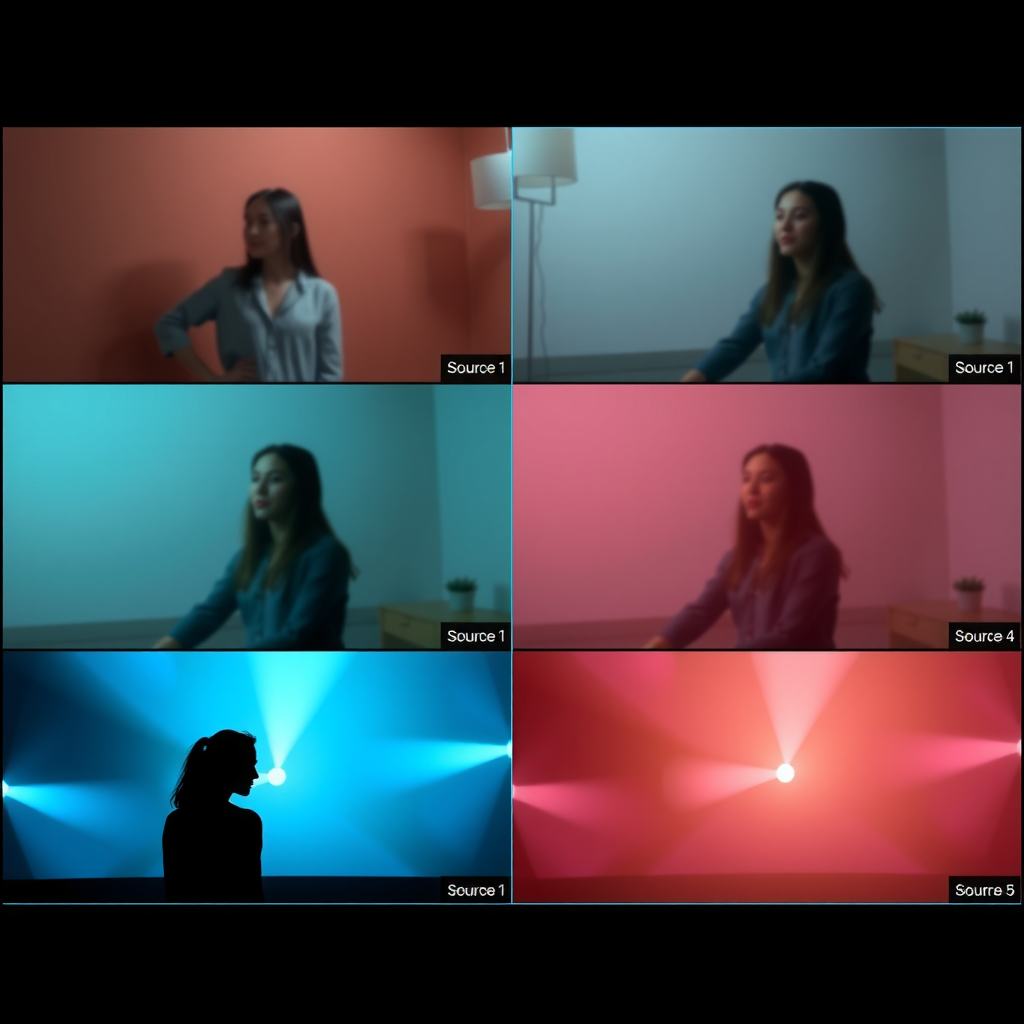

A critical aspect of video diffusion latent spaces is how they separately encode motion and appearance information. Understanding this disentanglement enables more precise control over video generation and manipulation.

Spatial vs. Temporal Channels

Video diffusion models typically organize latent representations with distinct spatial and temporal components. Spatial channels primarily encode appearance features such as textures, colors, and object shapes, while temporal channels capture motion patterns, dynamics, and frame-to-frame transitions.

Research has shown that certain latent dimensions exhibit strong correlations with specific types of motion (e.g., camera movement, object motion, deformation) or appearance attributes (e.g., lighting, style, object identity). This natural disentanglement can be leveraged for targeted manipulation.

Motion Vector Extraction

Extracting explicit motion information from latent representations enables applications such as motion transfer, motion editing, and motion-conditioned generation. Several approaches have been developed:

- Optical flow estimation: Compute optical flow in latent space to capture motion patterns

- Temporal difference analysis: Analyze frame-to-frame differences in latent space to identify motion-related dimensions

- Attention-based motion extraction: Use attention mechanisms to identify temporal dependencies and motion patterns

- Learned motion encoders: Train specialized networks to extract motion representations from video latents
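
Of the approaches listed above, temporal difference analysis is the easiest to sketch. The example below computes frame-to-frame differences and a per-channel "motion energy" score on a synthetic latent; the helper names and the toy data are hypothetical, and real latents would rarely separate this cleanly.

```python
import numpy as np


def temporal_differences(latents):
    """Frame-to-frame differences for a (frames, channels, height, width) latent."""
    return latents[1:] - latents[:-1]


def motion_energy_per_channel(latents):
    """Mean squared temporal difference for each latent channel."""
    diffs = temporal_differences(latents)
    return (diffs ** 2).mean(axis=(0, 2, 3))


# Synthetic clip: channel 0 never changes, channel 1 increases by 1 each frame.
toy = np.zeros((6, 2, 4, 4))
toy[:, 0] = 1.0
toy[:, 1] = np.arange(6).reshape(6, 1, 1)

energy = motion_energy_per_channel(toy)
print(energy)  # [0. 1.]
```

Channels with consistently high energy across many clips are candidates for motion-related dimensions, which can then be inspected or manipulated separately.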

Appearance Manipulation

Manipulating appearance while preserving motion requires careful identification of appearance-related latent dimensions. Techniques include:

Style transfer in latent space: Apply style transformations to spatial channels while keeping temporal channels fixed. This enables changing the visual style of a video while maintaining its motion dynamics.

Color and lighting adjustment: Identify latent dimensions corresponding to color and lighting, then manipulate these dimensions to adjust the video's appearance without affecting motion.

Texture and detail control: Fine-grained appearance features can be modified by targeting specific frequency bands or spatial scales in the latent representation.
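
One way to target frequency bands, sketched here under stated assumptions, is to split each latent frame into low- and high-frequency parts with a 2D FFT and rescale only the high-frequency part. The function names and the cutoff value are illustrative, and how such an edit looks after decoding depends on the model.

```python
import numpy as np


def split_frequency_bands(latents, cutoff=0.25):
    """Split each latent frame into low- and high-frequency parts.

    latents: array whose last two axes are (height, width).
    cutoff: radius in cycles per latent pixel (the Nyquist limit is 0.5).
    """
    h, w = latents.shape[-2:]
    fy = np.fft.fftfreq(h)[:, None]
    fx = np.fft.fftfreq(w)[None, :]
    low_mask = np.sqrt(fx ** 2 + fy ** 2) <= cutoff

    spectrum = np.fft.fft2(latents, axes=(-2, -1))
    low = np.fft.ifft2(spectrum * low_mask, axes=(-2, -1)).real
    high = latents - low
    return low, high


def scale_detail(latents, gain, cutoff=0.25):
    """Keep coarse structure and multiply fine detail by gain."""
    low, high = split_frequency_bands(latents, cutoff)
    return low + gain * high


rng = np.random.default_rng(2)
clip = rng.normal(size=(4, 4, 16, 16))     # random stand-in latent
softened = scale_detail(clip, gain=0.5)    # attenuate fine detail
sharpened = scale_detail(clip, gain=1.5)   # amplify fine detail
print(softened.shape, sharpened.shape)
```

A gain of 1 leaves the latent unchanged, and a gain of 0 keeps only the low-frequency part.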

Key Insight: Disentanglement Quality

The degree of motion-appearance disentanglement varies across different video diffusion architectures. Models with explicit temporal attention mechanisms and separate spatial-temporal processing pathways typically exhibit better disentanglement, enabling more precise control over video generation.

Practical Applications for Controllable Generation

Understanding and manipulating latent representations opens up numerous practical applications for researchers and practitioners working with video diffusion models. These applications span from creative video editing to scientific visualization and data augmentation.

Semantic Video Editing

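Latent space manipulation enables intuitive semantic editing of generated videos. By identifying directions in latent space corresponding to specific attributes (e.g., "add motion blur," "increase brightness," "change weather"), users can edit videos through simple vector arithmetic, for example z′ = z + α·d, where z is the original latent, d is the direction associated with the attribute, and α sets the strength of the edit.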

This approach has been successfully applied to tasks such as object insertion/removal, scene composition adjustment, and temporal dynamics modification. The key advantage is that edits remain consistent across frames, maintaining temporal coherence.
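
A common way to obtain an attribute direction, shown here as a sketch rather than as the method of any particular paper, is to take the difference between the mean latent of examples with the attribute and the mean latent of examples without it. All names and the synthetic data are assumptions; the "attribute" is simulated as a constant shift in channel 0.

```python
import numpy as np


def attribute_direction(with_attribute, without_attribute):
    """Unit-norm difference of mean latents between two groups of examples."""
    delta = with_attribute.mean(axis=0) - without_attribute.mean(axis=0)
    norm = np.linalg.norm(delta)
    if norm == 0:
        raise ValueError("the two groups have identical means")
    return delta / norm


def apply_edit(latent, direction, alpha):
    """Move a latent along a direction; alpha sets the edit strength."""
    return latent + alpha * direction


rng = np.random.default_rng(0)
offset = np.zeros((2, 4, 4, 4))   # (frames, channels, height, width)
offset[:, 0] = 1.0                # simulated attribute: shift in channel 0
plain = rng.normal(size=(64, 2, 4, 4, 4))
shifted = rng.normal(size=(64, 2, 4, 4, 4)) + offset

d = attribute_direction(shifted, plain)
z = plain[0]
edited = apply_edit(z, d, alpha=3.0)
print(np.linalg.norm(edited - z))  # equals alpha because d has unit norm
```

Because the same direction is added to every frame of the latent, the edit is applied uniformly over time, which is the source of the frame-to-frame consistency described above.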

Motion Transfer and Retargeting

By separating motion and appearance information in latent space, researchers can transfer motion patterns from one video to another. This enables applications such as:

- Applying the motion of one video to the appearance of another

- Retargeting motion to different object types or scales

- Creating variations of a video with different motion dynamics

- Generating training data for action recognition systems
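
The first item in the list above can be illustrated with a deliberately crude heuristic: treat the per-clip temporal mean as "appearance" and the residual around that mean as "motion", then recombine them across clips. This decomposition is an assumption made for illustration only; practical systems rely on learned encoders or attention features, and the function names are hypothetical.

```python
import numpy as np


def split_static_dynamic(latents):
    """Split a (frames, channels, height, width) latent into mean and residual."""
    static = latents.mean(axis=0, keepdims=True)  # time-averaged content
    dynamic = latents - static                    # what changes over time
    return static, dynamic


def transfer_motion(motion_source, appearance_source):
    """Combine the temporal residual of one clip with the mean of another."""
    if motion_source.shape != appearance_source.shape:
        raise ValueError("both clips must have the same latent shape")
    _, dynamic = split_static_dynamic(motion_source)
    static, _ = split_static_dynamic(appearance_source)
    return static + dynamic


rng = np.random.default_rng(3)
clip_motion = rng.normal(size=(6, 4, 8, 8))      # random stand-in latents
clip_appearance = rng.normal(size=(6, 4, 8, 8))
result = transfer_motion(clip_motion, clip_appearance)
print(result.shape)
```

By construction, the result has the time-averaged latent of the appearance clip and the frame-to-frame changes of the motion clip; whether the decoded video looks plausible is a separate, empirical question.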

Conditional Generation with Latent Guidance

Latent representations can serve as powerful conditioning signals for video generation. By manipulating specific regions or dimensions of the latent space, researchers can guide the generation process toward desired outcomes:

Spatial conditioning: Modify spatial regions of latent representations to control where specific objects or features appear in generated videos.

Temporal conditioning: Adjust temporal components to control the timing and dynamics of events in generated videos.

Multi-modal conditioning: Combine latent manipulation with other conditioning signals (text, images, audio) for fine-grained control over generation.
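
Spatial conditioning can be sketched as a masked blend between two latents, where a mask defined on the latent grid selects the region to overwrite. The helper name, the mask layout, and the stand-in data are assumptions; soft mask edges (values between 0 and 1) are one way to reduce visible seams.

```python
import numpy as np


def blend_region(base, donor, mask):
    """Blend donor into base where mask is nonzero.

    base, donor: latents of shape (frames, channels, height, width).
    mask: array of shape (height, width) with values in [0, 1]; it is
    broadcast over frames and channels.
    """
    mask = np.asarray(mask, dtype=np.float64)
    if mask.shape != base.shape[-2:]:
        raise ValueError("mask must match the latent height and width")
    return (1.0 - mask) * base + mask * donor


rng = np.random.default_rng(4)
base = rng.normal(size=(4, 4, 8, 8))    # random stand-in latents
donor = rng.normal(size=(4, 4, 8, 8))

mask = np.zeros((8, 8))
mask[2:6, 2:6] = 1.0                    # overwrite a central 4 x 4 region

combined = blend_region(base, donor, mask)
print(combined.shape)
```

Since one latent cell covers an 8 × 8 pixel patch under the H/8 × W/8 layout, masks defined in pixel space need to be downsampled to the latent grid first.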

Video Interpolation and Frame Synthesis

Latent space interpolation enables high-quality video interpolation and frame synthesis. By interpolating between latent representations of keyframes, models can generate smooth intermediate frames that maintain temporal consistency and visual quality.

This application is particularly valuable for:

- Increasing video frame rates for smoother playback

- Creating slow-motion effects from standard frame rate videos

- Filling in missing or corrupted frames in video sequences

- Generating transition sequences between different video clips
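
For the frame-rate use case, a minimal sketch is to insert linearly interpolated latent frames between each pair of neighbors and then decode the longer sequence. `upsample_frames` is a hypothetical helper working on a (frames, channels, height, width) array; decoding and any refinement by the diffusion model are outside the sketch.

```python
import numpy as np


def upsample_frames(latents, factor=2):
    """Insert factor - 1 interpolated latent frames between neighboring frames."""
    if factor < 1:
        raise ValueError("factor must be at least 1")
    frames = latents.shape[0]
    out = []
    for i in range(frames - 1):
        for k in range(factor):
            t = k / factor  # t = 0 reproduces the original frame i
            out.append((1.0 - t) * latents[i] + t * latents[i + 1])
    out.append(latents[-1])  # keep the final original frame
    return np.stack(out)


rng = np.random.default_rng(5)
keyframes = rng.normal(size=(5, 4, 8, 8))  # random stand-in latents
dense = upsample_frames(keyframes, factor=3)
print(dense.shape)  # (13, 4, 8, 8)
```

A clip with N frames becomes (N − 1) × factor + 1 frames, and every original frame is preserved at a multiple of `factor`.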

Implementation Example

When implementing latent interpolation for frame synthesis, consider using a combination of SLERP for global structure and LERP for fine details. This hybrid approach often produces superior results compared to using either method alone. Additionally, applying temporal smoothing filters to interpolated latents can further improve temporal consistency.

Advanced Techniques and Future Directions

The field of latent space manipulation in video diffusion models continues to evolve rapidly, with new techniques and applications emerging regularly. Several advanced approaches show particular promise for future research and development.

Learned Latent Manipulation

Rather than manually identifying semantic directions in latent space, recent work has explored learning manipulation functions directly from data. These approaches train neural networks to predict optimal latent modifications for achieving specific editing goals, potentially discovering non-obvious manipulation strategies that outperform hand-crafted methods.

Hierarchical Latent Spaces

Multi-scale latent representations enable more flexible control over video generation at different levels of abstraction. Coarse-scale latents control high-level scene composition and motion, while fine-scale latents handle details and textures. This hierarchical organization facilitates more intuitive and powerful editing capabilities.

Latent Space Regularization

Improving the structure and organization of latent spaces through specialized training objectives can enhance controllability and interpretability. Techniques such as disentanglement losses, orthogonality constraints, and semantic alignment objectives help create better-organized latent spaces that are easier to manipulate and understand.
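
As one concrete example of an orthogonality constraint, the sketch below scores a set of latent directions by how far their normalized Gram matrix is from the identity. The function name and this specific penalty are illustrative assumptions; in training, a term like this would be added to the loss and computed in an autodiff framework rather than in NumPy.

```python
import numpy as np


def orthogonality_penalty(directions):
    """Squared Frobenius norm of (D D^T - I) for unit-normalized rows of D.

    directions: array of shape (num_directions, ...); each direction is
    flattened to a vector before comparison.
    """
    d = directions.reshape(directions.shape[0], -1).astype(np.float64)
    d = d / np.linalg.norm(d, axis=1, keepdims=True)
    gram = d @ d.T
    return float(np.sum((gram - np.eye(len(d))) ** 2))


orthogonal = np.eye(3)
duplicated = np.array([[1.0, 0.0], [1.0, 0.0]])
print(orthogonality_penalty(orthogonal))  # 0.0
print(orthogonality_penalty(duplicated))  # 2.0
```

The penalty is zero only when all directions are mutually orthogonal, so minimizing it discourages two edit directions from encoding the same change.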

Cross-Modal Latent Spaces

Extending latent space manipulation to incorporate multiple modalities (text, audio, 3D geometry) opens new possibilities for controllable generation. Unified latent spaces that bridge different modalities enable applications such as text-guided video editing, audio-synchronized video generation, and 3D-aware video synthesis.

Conclusion

Understanding and manipulating latent representations in video diffusion models represents a crucial frontier in controllable video generation research. The techniques and approaches discussed in this guide—from dimensionality reduction and interpolation methods to motion-appearance disentanglement and practical applications—provide researchers with powerful tools for exploring and leveraging these complex high-dimensional spaces.

As video diffusion models continue to advance, the ability to precisely control and manipulate latent representations will become increasingly important for both research and practical applications. The field offers rich opportunities for innovation, from developing new manipulation techniques to discovering novel applications that leverage the unique properties of video latent spaces.

For researchers and practitioners working in this area, the key to success lies in combining theoretical understanding of latent space properties with practical experimentation and iterative refinement. By systematically exploring latent space structure, testing different manipulation strategies, and carefully evaluating results, we can continue to push the boundaries of what's possible with controllable video generation.

Key Takeaways

- Latent representations provide efficient, semantically meaningful encodings of video content that enable powerful manipulation capabilities

- Dimensionality reduction techniques like PCA and UMAP reveal the structure and organization of video latent spaces

- Interpolation methods ranging from simple LERP to sophisticated geodesic approaches enable smooth transitions between video concepts

- Motion and appearance information are often naturally disentangled in latent space, enabling independent control over these attributes

- Practical applications span semantic editing, motion transfer, conditional generation, and frame synthesis

- Advanced techniques including learned manipulation, hierarchical representations, and cross-modal latent spaces point toward exciting future directions

The journey of understanding video diffusion latent spaces is ongoing, with new discoveries and techniques emerging regularly. By building on the foundations laid out in this guide and staying engaged with the latest research developments, practitioners can continue to unlock new capabilities and applications for controllable video generation.